Abstract

Large scale Vision-Language (VL) models have shown tremendous success in aligning representations between visual and text modalities. This enables remarkable progress in zero-shot recognition, image generation & editing, and many other exciting tasks. However, VL models tend to over-represent objects while paying much less attention to verbs, and require additional tuning on video data for best zero-shot action recognition performance.

While previous work relied on large-scale, fully-annotated data, in this work we propose an unsupervised approach. We adapt a VL model for zero-shot and few-shot action recognition using a collection of unlabeled videos and an unpaired action dictionary. Based on that, we leverage Large Language Models and VL models to build a text bag for each unlabeled video via matching, text expansion and captioning. We use those bags in a Multiple Instance Learning setup to adapt an image-text backbone to video data.

Although finetuned on unlabeled video data, our resulting models demonstrate high transferability to numerous unseen zero-shot downstream tasks, improving the base VL model performance by up to 14%, and even comparing favorably to fully-supervised baselines in both zero-shot and few-shot video recognition transfer.

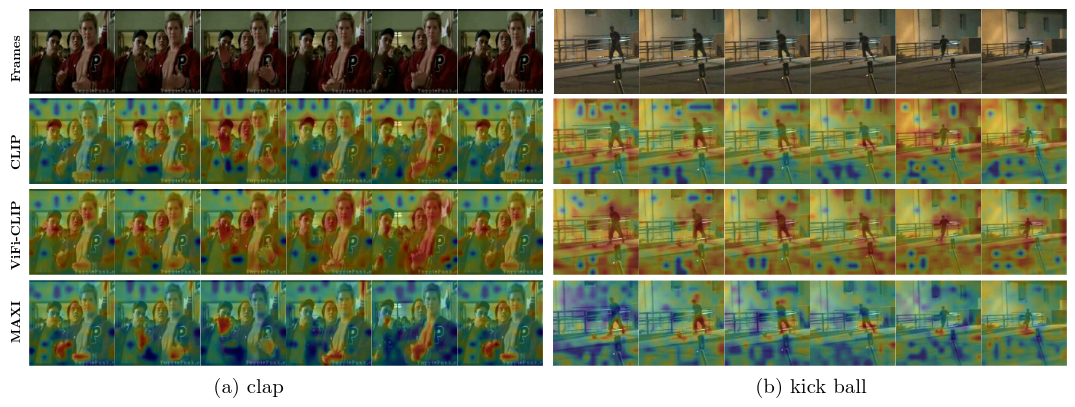

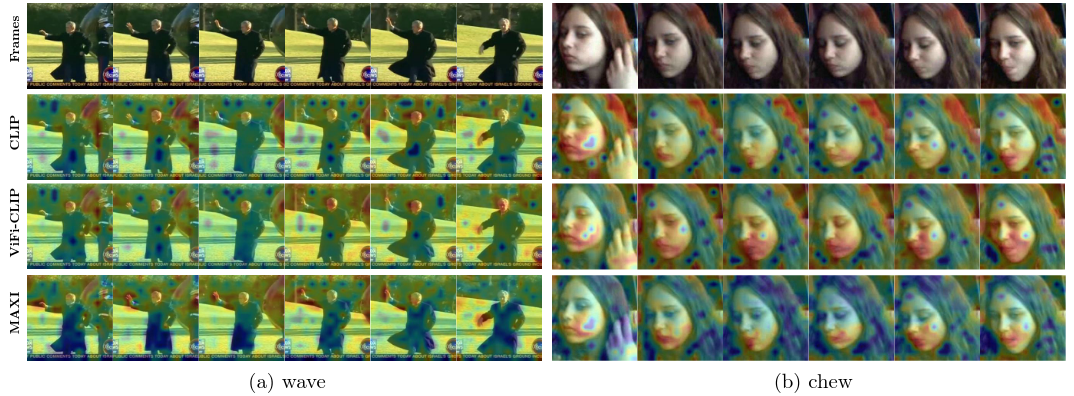

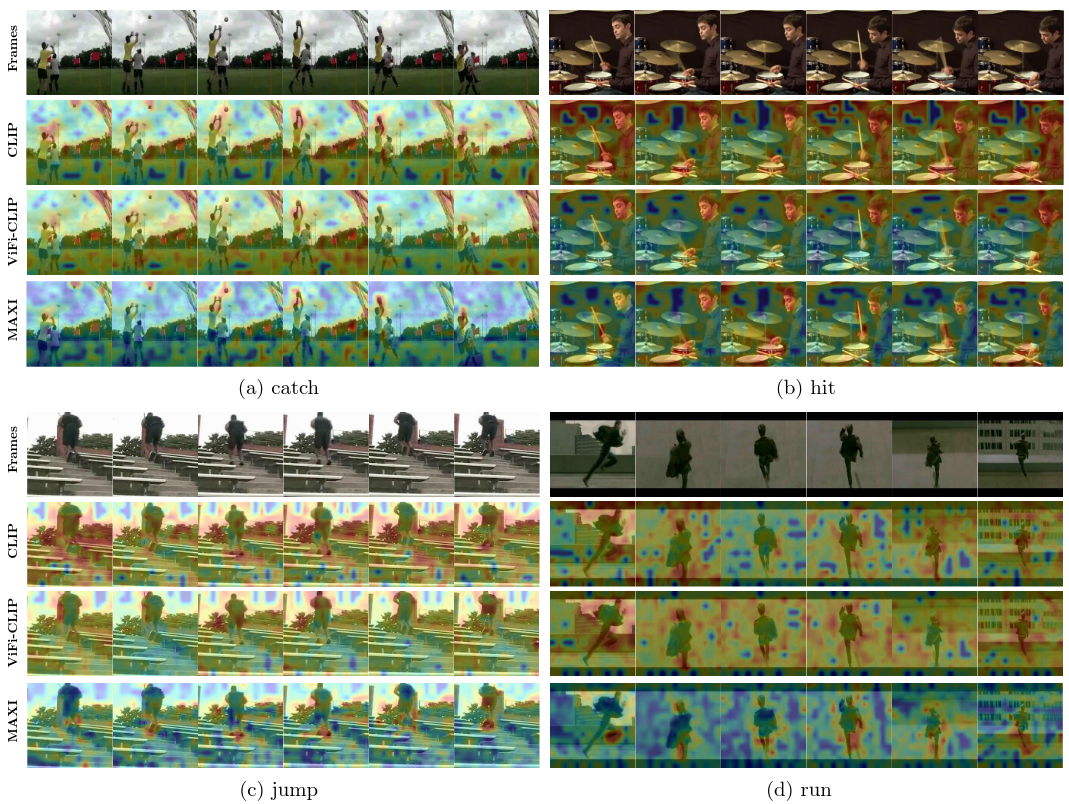

Attention Heatmaps

Attention heatmaps on actions which have a verb form (lemma or gerund) directly included in the predefined action dictionary. We compare among CLIP (2nd row), ViFi-CLIP (3rd row) and our MAXI (4th row). Warm and cold colors indicate high and low attention. MAXI has more focused attention on hands (for clap) and legs (for kick ball).

Attention heatmaps on actions which do have any verb form included in the predefined action dictionary. MAXI has more focused attention on hand and arm for wave, and on the area of mouth for chew.

Attention heatmaps on general actions whose verb form is a basic component of several actions in the predefined action dictionary. MAXI has more concentrated attention on the part where the action happens, e.g. catching ball with hands, hitting drum with stick, legs and feet jump on stairs, and attention on the running body.

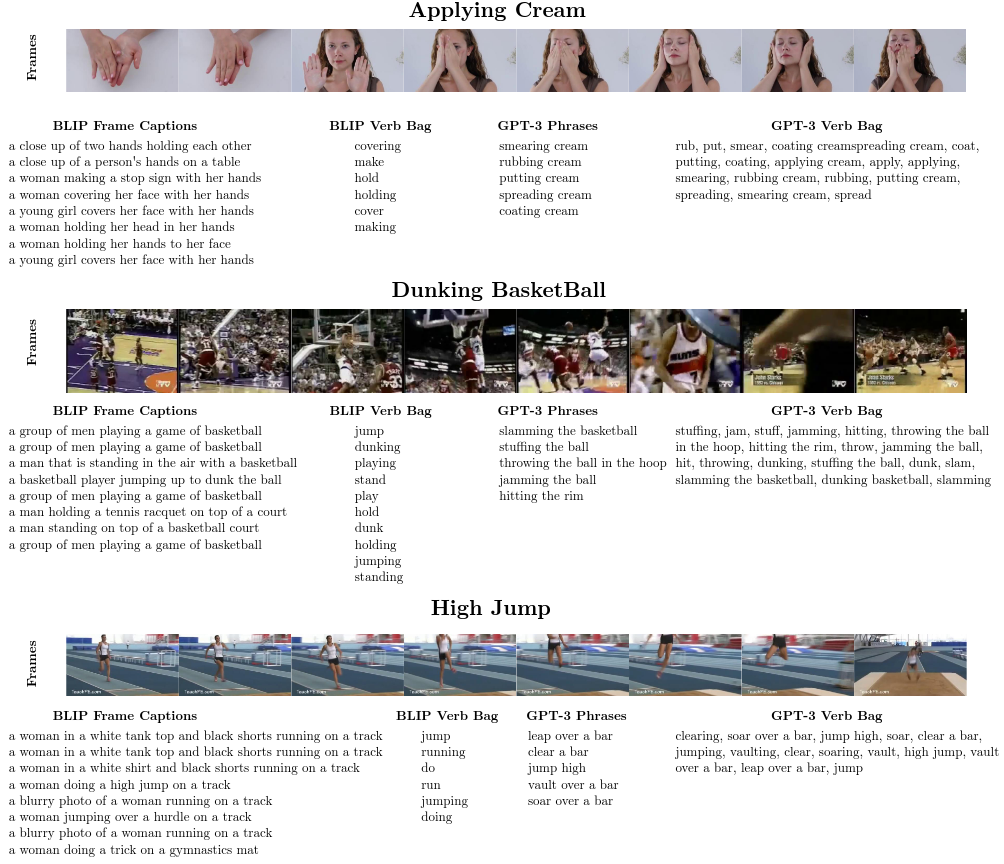

Examples of Text Bag

Examples of video frames, BLIP frame captions, GPT-3 phrases, together with the derived BLIP verb bag and GPT-3 verb bag. The videos are from the K400 dataset.